This fall we were faced with a challenge: keep the Music for All website up and running efficiently even during the busiest time of their year – the Bands of America Grand National Championships.

The reality of most websites is that they have little or no traffic. Sixty hits in a day is one hit every 24 minutes. This is why most websites can be hosted on shared hardware. In fact, the average website is hosted on a server shared with many other sites of different types. Our client Music for All was one such site, and every year during the Bands of America championships, the shared host would routinely fall over and stop serving traffic.

Music for All serves as a central hub for music performance videos and results. Not only do thousands of visitors look to this site for real-time performances, but the people behind Music for All rely on video-viewing subscription fees to operate their business. When the site does not work, they lose customers and ultimately income. This year, with only a few weeks before the band championships began, we were given the challenge of moving the site to a hosting provider that would not fall over.

We chose Amazon Web Services.

Decisions

We looked at a few options, such as virtual private hosts from some popular hosting providers. Some were cheap but lacked any useful detail, and some were quite expensive but provided no guarantees. While brainstorming our options, we considered moving the site and hosting our own solution on Amazon Web Services (AWS).

The idea of using AWS is a little intimidating given the learning curve of how to interact with the service and all of the added maintenance of running your own environment. Luckily, we had experience with both, and were able to put together a rough concept, price it out, present it to the client and get approval within a day. Keeping Music for All up and running during the championship season was very important to the client, as they’d never stayed live during the season before, and this solution was a large promise on our end to pull off a failure-free season for them.

Planning

Now that we had a plan, we needed to map out how to execute it and factor in the risks. We brought in our Joomla expert and talked through a few server layouts. We went with a simple solution: a centralized database server with multiple web servers. Our plan was to scale out the web servers to whatever it would take to meet the load requirements. We knew we could get a good-sized database server, and we hoped it would handle the load of many web servers. We weren’t entirely sure how to size it because we did not have a good sense of how the web and database tiers performed on the current host.

Here is what we went with:

Web Server

- Instance Type: t2.medium

- vCPU: 2

- Memory: 4 GB

- Disks: Magnetic

Database Server

- Instance Type: m3.xlarge

- vCPU: 4

- Memory: 15 GB

- Disks: SSD

To get all of these web servers to work together, we decided to use Amazon’s load balancer, which makes adding web servers relatively easy. Our biggest concerns with the load balancer were how Joomla would react in a load-balanced setup and how to set up the site’s SSL certificate. We knew we could fall back on sticky sessions if we needed to, but our preference was to allow any request from a user to be serviced by any web server. Unfortunately, to test the SSL certificate, we would have to wait for the site to go live and then do some quick testing and heroics to make it work. This was a risk point in our plan.
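To make the trade-off concrete, here is a minimal Python sketch of the two routing styles we were weighing. It is an illustration only, not how Amazon's load balancer works internally (its sticky sessions are cookie-based rather than hash-based), and the server names and session ID are hypothetical.

```python
import hashlib
from itertools import cycle

# Hypothetical server names, for illustration only.
WEB_SERVERS = ["web-1", "web-2", "web-3"]


def round_robin(servers):
    """Return an endless rotation: any request may land on any server."""
    return cycle(servers)


def sticky(servers, session_id):
    """Map a given session ID to the same server every time."""
    digest = hashlib.sha256(session_id.encode("utf-8")).hexdigest()
    return servers[int(digest, 16) % len(servers)]


rotation = round_robin(WEB_SERVERS)
first_four = [next(rotation) for _ in range(4)]
print(first_four)  # ['web-1', 'web-2', 'web-3', 'web-1']

# The same session always resolves to the same server.
print(sticky(WEB_SERVERS, "session-abc") == sticky(WEB_SERVERS, "session-abc"))  # True
```

The rotation only works if no server holds state that the others lack, which is why shared uploads and a central database mattered so much to us.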

One other requirement was allowing site users to upload content through Joomla and having that content available on all web servers. We considered a few options to keep the web directory in sync. Ultimately, the easiest method to set up, and the most reliable, was to create an NFS share on our DB server and mount it on each web server. Though this would force web request I/O to go over the network and read from the database server, the networking within AWS from EC2 to EC2 is very fast. Also, with the DB server on SSD drives, we felt we could afford the extra I/O.

Setup

We provisioned a database server and a web server in EC2 based on one of Ubuntu’s images. We chose Ubuntu based solely on our previous experience with it and its solid package management, which made it easy to get the software we needed set up and keep it updated. The database server has MySQL installed, with some minor tweaks to the configuration to take advantage of all of the available RAM. The client’s database was restored to this server so we could test the setup. Additionally, we configured the NFS share on this server with a copy of the website.

For each web server, we installed Apache, PHP and the configuration necessary to run Joomla. We set up both a standard and a secure web server configuration and pointed both to the NFS-mounted file system. We then changed the Joomla configuration to point to the separate database server and tested it all.

Testing took a few rounds, as we wanted to make sure our key concerns worked: file uploads, CSS changes, Joomla plugin updates, and even that system reboots would not break anything within this new configuration. As soon as we had one working web server, we added the AWS load balancer. We were able to add the SSL certificate to the load balancer, making the web server configuration a little bit easier. We then tested with multiple web servers behind the load balancer and found success.

The next issue we ran into, which is unique to Amazon Web Services, is that you can only use a CNAME alias to point a nice URL to the generated DNS name that represents the load balancer. You cannot point to a load balancer IP, as AWS can change the underlying IP at any time. This isn’t a problem if you alias www.yourdomain.com to the load balancer, but we also wanted the client’s A-record domain (the bare domain without www) to point to it. Amazon has a solution (or hack) for this: an A-record alias within their Route 53 service. This is not a standard DNS feature, but we wanted it, so we moved all DNS hosting to Amazon as well.
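For readers who script their DNS, here is a rough Python sketch of what such an alias record looks like as a Route 53 change batch. It only builds the dictionary; the result could be passed as `ChangeBatch` to boto3's `change_resource_record_sets`. This is not necessarily how we created our records, and the load balancer DNS name and hosted zone ID below are placeholders.

```python
def alias_change_batch(record_name, elb_dns_name, elb_hosted_zone_id):
    """Build a Route 53 change batch that aliases an A record to a load balancer."""
    return {
        "Comment": "Alias the bare domain to the load balancer",
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": record_name,
                    "Type": "A",
                    # An alias record has an AliasTarget instead of a TTL
                    # and a list of IP addresses.
                    "AliasTarget": {
                        "HostedZoneId": elb_hosted_zone_id,
                        "DNSName": elb_dns_name,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ],
    }


batch = alias_change_batch(
    "yourdomain.com.",
    "example-lb-123456.us-east-1.elb.amazonaws.com.",  # placeholder DNS name
    "ZEXAMPLEZONEID",  # placeholder: the load balancer's own hosted zone ID
)
record = batch["Changes"][0]["ResourceRecordSet"]
print(record["Type"], "->", record["AliasTarget"]["DNSName"])
```

Because the record points at the load balancer's name rather than an IP, it keeps working when AWS changes the underlying addresses.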

Monitoring

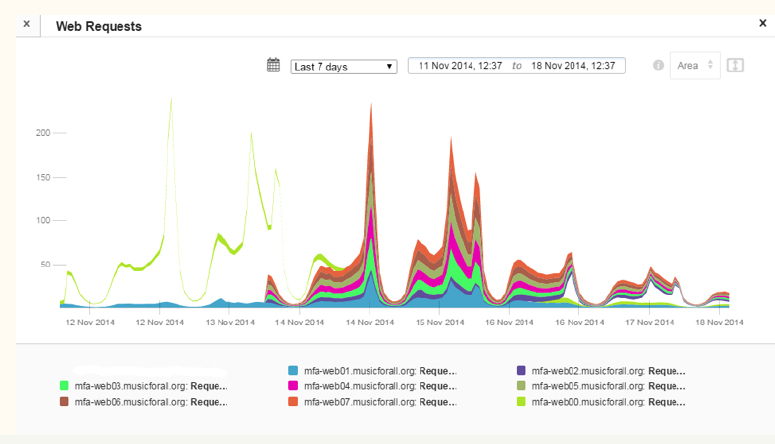

Two critical aspects of making sure the website did not crash as load hit it were monitoring the effectiveness of each server and getting feedback on when to launch another web server. Amazon provides some monitoring through their CloudWatch product, but we wanted detailed metrics around the application components and alerting based on critical thresholds.

We certainly did not have the time to install a monitoring solution like Nagios due to its complexity. I had previous experience with Server Density and knew I could set it up quickly, get the specific monitoring I needed for MySQL and Apache, have alerts sent directly to my phone, and use their external monitoring to test the website.

Since Server Density provides an Ubuntu installation package, it was really as easy as setting up an account with them, running the Ubuntu installer commands, making a single configuration change to input the key for the client, and enabling the Apache or MySQL module I wanted. Within minutes I had Music for All metrics showing up on the Server Density site. Best of all, as we spun up new web servers, we could easily add them, and they got the same level of monitoring with no additional setup, with the global alerts applied to all new servers.

We set up a few external monitors for US connections, international connections, and one to check the content of the home page. We used these to measure site responsiveness and to alert us if the site was not displaying the proper page via Joomla.
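The content check is the least obvious of the three, so here is a minimal Python sketch of the idea. It is not Server Density's implementation, and the marker text is a hypothetical choice: a page counts as healthy only if it returns HTTP 200 and contains a string that Joomla renders on the real home page.

```python
import urllib.request


def page_looks_healthy(status, body, marker):
    """A page passes only with HTTP 200 and the expected text present."""
    return status == 200 and marker in body


def check_site(url, marker, timeout=10):
    """Fetch the page and apply the content check; any network error fails it."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
            return page_looks_healthy(response.status, body, marker)
    except OSError:  # covers URLError, HTTPError and socket timeouts
        return False


# The decision logic on its own, with hypothetical page bodies:
print(page_looks_healthy(200, "<title>Music for All</title>", "Music for All"))  # True
print(page_looks_healthy(200, "Database connection error", "Music for All"))  # False
```

The second case is the one a plain uptime check misses: the web server answers, but the page behind it is an error rather than the site.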

The Big Night

We were lucky enough to have several weeks of increasing site traffic in our new environment. This gave us time to gauge how effective our strategy was. We ended up having to make one Apache configuration change to allow a higher number of threads. In addition, we decided for the championship weekend to just go ahead and set up all eight web servers.

We were quite happy with the results. At peak usage, we saw about 380 requests per second come through the application.
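As a back-of-the-envelope check, the short Python snippet below works out what that peak means per web server. It assumes the load balancer spread requests evenly across all eight servers, which we did not measure precisely.

```python
PEAK_REQUESTS_PER_SECOND = 380  # peak figure quoted above
WEB_SERVERS = 8                 # servers running for the championship weekend

# Assumption: requests are spread evenly across the servers.
per_server = PEAK_REQUESTS_PER_SECOND / WEB_SERVERS
print(per_server)  # 47.5

# Load on each remaining server if one server dropped out at peak.
per_server_one_down = PEAK_REQUESTS_PER_SECOND / (WEB_SERVERS - 1)
print(round(per_server_one_down, 1))  # 54.3
```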

Lessons Learned

After we successfully completed the big night for the client, we sat down as a team to discuss what went well and what didn’t. Based on this, we have put together notes for both the client and our team to review prior to next year’s championship. Some of the items we noted were:

- We should make use of the Amazon Auto Scaling Group to control the launching of web servers based on resource usage instead of manually launching new web servers.

- We should make use of the Server Density API to provision new web servers within the monitoring application.

- We were surprised to learn that the database server was lightly used, with low CPU and RAM consumption. Next year, we could go with an instance half the size and cost.

- Since we had the website disk and the database disk on the same SSD array, we did not have good insight into where most of the I/O was, but we suspect it was the website. Next time, we will separate the two so we can monitor each independently.
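To illustrate the first lesson, here is a small Python sketch of the kind of rule an Auto Scaling policy would apply for us. It is a simplification, not the AWS API, and the CPU thresholds and server limits are hypothetical values we would still need to tune.

```python
def desired_server_count(current, avg_cpu_percent,
                         scale_out_at=70.0, scale_in_at=30.0,
                         minimum=2, maximum=8):
    """Return how many web servers should run, given average CPU usage.

    All thresholds and limits are hypothetical defaults for illustration.
    """
    if avg_cpu_percent >= scale_out_at:
        target = current + 1  # under pressure: add a server
    elif avg_cpu_percent <= scale_in_at:
        target = current - 1  # mostly idle: remove a server
    else:
        target = current      # within the comfortable band: no change
    # Never go below the minimum or above the maximum.
    return max(minimum, min(maximum, target))


print(desired_server_count(3, 85.0))  # 4
print(desired_server_count(8, 95.0))  # 8
print(desired_server_count(2, 10.0))  # 2
```

The gap between the two thresholds keeps the group from adding and removing servers on every small swing in load.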

Overall, we consider this project a major success.